I have been thinking about the role of “attention” in personal safety lately. I can’t tell you how many times I have heard supervisors say, “He wouldn’t have gotten hurt if he had just been paying attention.” In reality, he was paying attention, just to the wrong things. Let me illustrate this with a brief observation. Two of my grandsons (ages 4 and 6) play organized baseball. The 4-year-old plays what is called Tee-ball, so named because the coach places the ball on a chest-high tee and the batter attempts to hit it into the field of play, where players on the opposing team man the normal defensive positions. It is my observation of the players on defense that has helped me understand attention in greater depth.

Most of the batters at this age can’t hit the ball past the infield, and most of them are lucky to get it to the pitcher’s mound, so the outfielders have very little chance of a ball ever reaching them, and they seem to know this. For the most part, the “pitcher” (i.e., the person standing on the mound) and, to some extent, the other infielders watch the batter and respond to the ball. The outfielders, however, are a very different story. They spend their time playing in the dirt, rolling on the ground, chasing butterflies or chasing each other. On the rare occasion that a ball does reach the outfield, the coach has to yell instructions to get his outfielders to look for the ball, pick it up and throw it to the infield. There is a definite difference in attention between the infield and the outfield in Tee-ball. This is not the case, however, in the “machine-pitch” league that my 6-year-old grandson plays in. For the most part, all of the defensive players seem to attend to the batter and respond when the ball is hit. So what is the difference?
Obviously there is a maturational difference between the 4- and 5-year-olds and the 6- and 7-year-olds, but I don’t think this explains all of the attentional difference, because even Tee-ball players seem to pay more attention when playing the infield. I think much of it has to do with expectations and salience. Attention is the process of selecting among the many competing stimuli present in one’s environment, processing some while inhibiting the processing of others. That selection process is driven by our goals and expectations and by the salience of the external variables in our environment. The goal of a 4-year-old “pitcher” is to impress her parents, grandparents and coach, and she expects the ball to come her way, so her attention is directed to the batter and the ball. The 4-year-old outfielder’s goal is to get through this inning so that he can bat again and impress his audience, knowing that the probability of a ball coming his way is very small. The goals and expectations differ between the infield and the outfield, so the stimuli that are attended to differ as well. The same is true in the workplace. What is salient, important and obvious to the supervisor (after the injury occurred) is not necessarily what was salient, important and obvious to the injured employee before the injury occurred. We can’t attend to everything, so it is the job of the supervisor (or parent, or Tee-ball coach) to make the most important stimuli (e.g., risk in the workplace, or the batter and ball in a Tee-ball game) salient. This is why the discussions that take place before, during and after the job are so important for focusing workers’ attention on the salient stimuli in their environment. Blaming the person for “not paying attention” is not the answer, because we don’t intentionally “not pay attention.” Creating a context where the important stimuli are salient is a good starting point.

Lone Workers and “Self Intervention”

We work with a lot of companies that have Stop Work Authority policies and are concerned that their employees are not stepping up and intervening when they see another employee doing something unsafe. So they ask us to help their employees develop the skills and the confidence to do this through our SafetyCompass®: Intervention training program. Intervention is critical to maintaining a safe workplace where teams of employees work together to accomplish results. But what about situations where work is accomplished not by teams but by individuals working in isolation: the Lone Worker? He or she doesn’t have anyone around to watch their back and intervene when they are engaging in unsafe actions, so what can be done to improve safety in these situations? It requires “self intervention.” When we train intervention skills, we help our students understand that the critical variable is understanding why the person has decided to act in an unsafe way, which means understanding the person’s context. This is also the critical variable in “self intervention.” Everyone writing (me) or reading (you) this blog has at some point in their life been a lone worker. Have you ever driven down the road by yourself? Have you ever worked on a project at home with no one around? Now, have you ever found yourself speeding when you were driving alone, or using a power tool on your home project without the proper PPE? Most of us can answer “yes” to both of these questions. In the moment those actions occurred, it probably made perfect sense to you to do what you were doing because of your context. Perhaps you were speeding because everyone else was speeding and you wanted to “keep up.”
Maybe you didn’t wear your PPE because it wasn’t readily available, what you were doing was only going to take a minute, and you fell victim to the “unit bias,” the psychological phenomenon that creates in us a desire to complete a project before moving on to another. Had you stopped (mentally) and evaluated the context before engaging in those actions, you might have recognized that both were unsafe and that the potential consequences were severe enough that you would have made a different decision. “Self intervention” is the process of evaluating your own personal context, especially when you are alone, to identify the contextual factors currently driving your decision making, while also evaluating the risk and an approach to mitigating it before engaging in the activity. It requires understanding that we are all susceptible to cognitive biases such as the unit bias, and that we can all become “blind” to risk unless we stop, ask ourselves why we are doing (or about to do) what we are doing, evaluate the risk associated with that action and then make corrections to mitigate that risk. When working alone we don’t have the luxury of someone else watching out for us, so we have to consciously do that ourselves. Obviously, as employers we have the responsibility to engineer the workplace to protect our lone workers, but we can’t put a barrier in place for every risk, so we should equip our lone workers with the knowledge and skills to self-intervene before engaging in risky activities. We need to help them develop the self-intervention habit.

Are Safety Incentive Programs Counterproductive?

In our February 11, 2015 blog we talked about “How Context Impacts Your Motivation,” and one of the contextual aspects of many workplaces is a Safety Incentive Program designed to motivate employees to improve their safety performance. Historically, the “safety bonus” has been contingent on the team having no Lost Time Injuries (LTIs) during a specified period of time. The idea is to provide an extrinsic reward for safe performance that will increase the likelihood of safe behavior so that accidents will be reduced or eliminated. We also concluded in that blog that what we really want is people working for us who are highly intrinsically motivated and not in need of a lot of extrinsic “push” to perform. Safety Incentive Programs are based entirely on the notion of extrinsic “push.” So do they work? We know from research dating back to the 1960s that introducing an extrinsic reward for an activity that is already intrinsically driven will reduce the desire to engage in that activity once the reward is removed. In other words, extrinsic rewards can reduce intrinsic motivation. I don’t know about you, but I don’t want to get hurt, and I would assume that most people don’t want to get injured either. People are already intrinsically motivated to be safe and avoid pain. We also know that financial incentives can have perverse and unintended consequences. It is well known that Safety Incentive Programs can lead to underreporting of incidents and even injuries. Peer pressure to keep an incident quiet so that the team won’t lose its safety bonus happens in many organizations. This not only reduces information about why incidents are occurring, but also decreases management’s ability to improve unsafe conditions, procedures, etc., making similar incidents more likely in the future.
Because of these unintended consequences, the Occupational Safety and Health Administration (OSHA) has recently determined that safety incentive programs based on incident frequency must be eliminated. OSHA’s suggestion is that safety bonuses should instead be contingent on upstream activities, such as participation in safety improvement efforts like safety meetings and training. On a side note, in some organizations the Production Incentive Program is in direct conflict with the Safety Incentive Program, so that production outweighs safety from a financial perspective. When this happens, the push for production speed can interfere with the focus on safety, and incidents become more likely.

Our View

It is our view that Safety Incentive Programs are not only unnecessary but potentially counterproductive. Capitalizing on the already present intrinsic motivation to be safe, and creating an organizational culture/context that fosters that motivation to work together as a team to keep each other safe, is much more positive and effective than adding the extrinsic incentive of money for safety. We suggest that management take the money budgeted for the safety incentive program and give pay increases, while simultaneously examining and improving the organizational context to help keep employees safe.

Contrasting Observation and Intervention Programs - Treating Symptoms vs. the Cause

Our loyal readers are quite familiar with our 2010 research into safety interventions in the workplace and the SafetyCompass® Intervention training that grew out of that research. What you may not know is why we started that research to begin with. For years we had heard client after client describe their concerns about their observation programs. The common theme was that observation cards were plentiful when the program started, but submissions slowed down over time. To increase the number of cards submitted, companies instituted various tactics, such as communicating the importance of observation cards, rewarding the best cards and holding team competitions. These tactics worked in the short term but didn’t have a sustainable impact on the number or quality of cards being turned in. Eventually leadership simply started requiring employees to turn in a certain number of cards in a given period of time. Clients went on to tell us of their frustration when they began receiving cards that were completely made up, with some employees even using the cards to communicate their dissatisfaction with their working conditions rather than to report safety-related observations. They simply didn’t know what to do to make their observation programs work effectively.

As we spoke with their employees, we heard a different story. They told us about the hope they themselves had when the program was launched. They were excited about the opportunity to provide information about what was really going on in their workplace so they could get things fixed and make their jobs safer. They began by turning in cards and waiting to hear back about the fixes. When the fixes didn’t come, they turned in more cards. Sometimes they would hear back in safety meetings about certain aspects of safety that needed to be focused on, but they saw no real fixes.
A few of them even told us of times when they turned in cards and their managers actually got angry about the behaviors being reported. Eventually they simply stopped turning in cards, because leadership wasn’t paying attention to them and the cards were even getting people in trouble. Then leadership started giving out gift cards for the best observation cards, so they figured they would turn a few in just to see if they could win one. After all, who couldn’t use an extra $50 at Walmart? But even then, nothing was happening with the cards they turned in, so they eventually gave up again. The last straw was when their manager told them they had to turn in five per week. They spoke about the frustration that came with the added required paperwork when they knew nobody was looking at the cards anyway. As one person put it, “They’re just throwing them into a file cabinet, never to be seen again.” So the obvious choice for this person was to fill out his five cards every Friday afternoon and turn them in on his way out of the facility. It seemed that these organizations were all experiencing a similar Observation Program Death Spiral.

The obvious question is why? Why would such a well-intentioned and potentially game-changing program fail in so many organizations? After quite a bit of research into these organizations, the answer became clear: they weren’t intervening. Or, more precisely, they weren’t intervening in a very specific manner. The intent of observation programs is to provide data showing the most pervasive unsafe actions in our organizations. If, as the thinking goes, we can find out which unsafe behaviors are most common in our organization, then we can target those behaviors and change them. The fundamental problem with that premise is that it treats behaviors as the cause of events (near misses, LTAs, injuries, environmental spills, etc.). In fact, behaviors are themselves the result of something else. People don’t behave in a vacuum, as if they simply decide that acting unsafely is more desirable than acting safely. There are factors that drive human behavior; the behaviors themselves are simply symptoms of something else in the context surrounding and embedded in our organizations. For this reason, trending behaviors as a target for change efforts is no different from doctors treating the most common symptoms of a disease rather than curing the disease itself.

A proper intervention is essentially a diagnosis of what is creating a behavior. Or, to borrow the phrase from the title of our friend Todd Conklin’s newest book, a pre-accident investigation. An intervention program equips all employees with the skills to perform these investigations. When they see an unsafe behavior, they intervene in a specific way that allows them to create immediate safety in that moment, but they also diagnose the context to determine why it made sense to behave that way to begin with. Once the context is understood, a targeted fix can be put into place that makes the behavior less likely to happen in the future. The next step in an Intervention Program is incredibly important for organizational process improvement. Each intervention should be recorded so that the context that created the behavior (equipment issues, workplace layout, procedural or rule discrepancies, production pressure, etc.) can be gathered and trended against other interventions. Once a large enough sample of interventions has been collected, organizations can see the inner workings of their work environment. Rather than simply looking at the total number of unsafe behaviors being performed in their company (e.g., not tying off at heights), they can also understand the most common and salient contexts driving those behaviors. Only then does leadership have the ability to put fixes into place that actually change the context in which their employees perform their jobs, and only then will they be able to make sustainable improvements.
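The recording-and-trending step described above can be sketched in a few lines of code. This is a minimal illustration only, assuming a hypothetical record format in which each logged intervention captures the unsafe behavior plus a list of context factors; the `Intervention` class, the two helper functions and the sample entries are all invented for illustration and are not part of any SafetyCompass® tool.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field


@dataclass
class Intervention:
    """One logged intervention (hypothetical record format, for illustration only)."""
    behavior: str  # the unsafe action that prompted the intervention
    context: list[str] = field(default_factory=list)  # factors that made the action make sense


def trend_contexts(log: list[Intervention]) -> Counter:
    """Count how often each context factor appears across all logged interventions."""
    return Counter(factor for item in log for factor in item.context)


def contexts_by_behavior(log: list[Intervention]) -> dict[str, Counter]:
    """For each observed behavior, count the context factors recorded behind it."""
    table = defaultdict(Counter)
    for item in log:
        table[item.behavior].update(item.context)
    return table


# Hypothetical sample entries; real categories would come from an organization's own log.
log = [
    Intervention("not tying off at heights", ["anchor point out of reach", "production pressure"]),
    Intervention("not tying off at heights", ["anchor point out of reach"]),
    Intervention("skipping lockout", ["production pressure", "procedure unclear"]),
]

print(trend_contexts(log).most_common(2))
# [('anchor point out of reach', 2), ('production pressure', 2)]
print(contexts_by_behavior(log)["skipping lockout"])
```

Ranking context factors rather than behaviors is the point of this design: in the toy log, “production pressure” sits behind two different behaviors, a pattern that a tally of behaviors alone would never reveal.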

Tying It Back to Observation Programs

The Observation Program Death Spiral was the result of information that was not actionable. Once a company has actionable data, it can institute targeted fixes. Organizations that use this approach have actually seen an increase in the number of interventions logged into the system, because employees actually see something happening. They see that their interventions are leading to process improvement in their workplace, and that’s the type of motivation no $50 gift card could ever buy.

Crew Resource Management (CRM) and the Energy Industry

If you work in the airline or healthcare industries, you are probably already familiar with Crew Resource Management (CRM) training. CRM training was an outgrowth of evaluations of catastrophic airline crashes that were deemed to be due to “human error”. The original idea behind CRM was to capitalize on the knowledge and observations of other crew/team members when the pilot or doctor was seen doing something that could lead to an incident. The goal is to help crew members develop the skills necessary to successfully anticipate and recognize hazards and then correct the situation. Recently, the energy industry has begun to provide guidelines for member companies to implement CRM training in an attempt to avoid catastrophic events like the Macondo and Montara blowouts.* CRM training focuses on six non-technical areas needed to reduce the chances of “human error”. These six areas are:

- Situation Awareness: This involves vigilance and the gathering, processing and understanding of information relevant to current or future risk.

- Decision Making: This involves the skills needed to evaluate information before determining the best course of action, select the best option, and implement and evaluate decisions.

- Communication: This involves the skills needed to communicate information clearly, including decisions, so that others understand their role in implementation. It also involves the skills for speaking up when another person is observed acting in an unsafe manner.

- Teamwork: This involves an understanding of current team roles and how each individual’s performance and interaction with others (including conflict resolution) can affect results.

- Leadership: This involves the skills and attributes needed to get others to follow when necessary. It also includes the ability to plan, delegate, direct and facilitate as needed.

- Factors that impact human performance: Typically this category has focused on stress and fatigue as contributors to unsafe actions or conditions. However, drawing on the wealth of Human Factors research, we view this category more broadly, as including the many ways in which human performance is affected by the interaction between people and their working contexts.

We have been writing on these skill areas in our blogs and newsletters for several years and thought that some of our work on these subjects might be beneficial to our readers who are either currently working to implement CRM training or evaluating the need to do so. If you have been following our writings, you will already know that we take a Human Factors approach to performance improvement (including safety performance), which involves an understanding of the contextual factors that impact performance deemed to be “human error”. It is our view that, while human error is almost always a component of failure, it is seldom the sufficient cause. We hope that this link to our archive of Crew Resource Management related posts will be useful and thought-provoking. For ease of access, you can either click on one of the six CRM skill sets described above, or the Crew Resource Management link, which includes all related writings from the six skill sets.

*OGP, Crew Resource Management for Well Operations, Report 501, April 2014; IOGP, Guidelines for Implementing Well Operations Crew Resource Management Training, Report 502, December 2014; Energy Institute (EI), Guidance on Crew Resource Management (CRM) and Non-Technical Skills Training Programmes, 1st edition, 2014.

Why It Makes Sense to Tolerate Risk

Risk-Taking and Sense-Making

Risk tolerance is a real challenge for nearly all of us, whether we are managing a team in a high-risk environment or trying to get a teenager to stop using his cellphone while driving. It is also, unfortunately, a somewhat complicated matter, with plenty of moving parts. Personality, past experiences, fatigue and mood have all been shown to affect a person’s tolerance for risk. Apart from trying to change individuals’ “predispositions” toward risk-taking, there is a lot that we can do to help minimize risk tolerance in any given context. The key, as it turns out, is to focus our efforts on the context itself.

If you have followed our blog, you are by now familiar with the idea of “local rationality,” which goes something like this: Our actions and decisions are heavily influenced by the factors that are most obvious, pressing and significant (or, “salient”) in our immediate context. In other words, what we do makes sense to us in the moment. When was the last time you did something that, in retrospect, had you mumbling to yourself, “What was I thinking?” When you look back on a previous decision, it doesn’t always make sense because you are no longer under the influence of the context in which you originally made that decision.

What does local rationality have to do with risk tolerance? It’s simple. When someone makes a decision to do something that he knows is risky, it makes sense to him given the factors that are most salient in his immediate context.

If we want to help others be less tolerant of risk, we should start by understanding which factors in a person’s context are likely to lead him to think that it makes sense to do risky things. There are many factors, ranging from the layout of the physical space to the structure of incentive systems. Some are obvious; others are not. Here are a couple of significant but often overlooked factors.

Being in a Position of Relative Power

If you have a chemistry set and a few willing test subjects, give this experiment a shot. Have two people sit in submissive positions (heads downcast, backs slouched) and one person stand over them in a power position (arms crossed, towering and glaring down at the others). After only 60 seconds in these positions, something surprising happens to the brain chemistry of the person in the power position. Levels of testosterone (associated with risk tolerance) and cortisol (associated with risk aversion) change, and this person is now more inclined to do risky things. That’s right; when you are in a position of power relative to others in your context, you are more risk tolerant.

There is an important limiting factor here, though. If the person in power also feels a sense of responsibility for the wellbeing of others in that context, the brain chemistry changes and he or she becomes more risk averse. Parents are a great example. They are clearly in a power-position relative to their children, but because parents are profoundly aware of their role in protecting their children, they are less likely to do risky things.

If you want to limit the effects of relative power-positioning on certain individuals’ risk tolerance - think supervisors, team leads, mentors and veteran employees - help them gain a clear sense of responsibility for the wellbeing of others around them.

Authority Pressure

On a remote job site in West Texas, a young laborer stepped over a pressurized hose on his way to get a tool from his truck. Moments later, the hose erupted and he narrowly avoided a life-changing catastrophe. This young employee was fully aware of the risk of stepping over a pressurized hose, and under normal circumstances, he would never have done something so risky; but in that moment it made sense because his supervisor had just instructed him with a tone of urgency to fetch the tool.

It is well documented that people will do wildly uncharacteristic things when instructed to do so by an authority figure (see Stanley Milgram’s “Behavioral Study of Obedience”). The troubling part is that people will do uncharacteristically dangerous things - risking life and limb - under the influence of minor and even unintentional pressure from an authority figure. Leaders need to be made aware of their influence and unceasingly demonstrate that, for them, working safely trumps every other command.

A Parting Thought

There is certainly more to be said about minimizing risk tolerance, but a critical first step is to recognize that the contexts in which people find themselves, which are the very same contexts that managers, supervisors and parents have substantial control over, directly affect people’s risk tolerance.

So, with that “trouble” employee / relative / friend / child in mind, think to yourself, how might their context lead them to think that it makes sense to do risky things?

A Personal Perspective on Context and Risk Taking

Most of our blog posts focus on current thinking about various aspects of safety and human performance, and are an attempt not only to contribute to that discussion but to generate further discussion as well. I can’t think of an instance when we took a personal perspective on the subject, but an experience I had a couple of weeks ago got me thinking about willingness to take risks and how context really does play a crucial role in that decision. I was attending a weekend-long family reunion in the Texas Hill Country, where 25 family members were all staying together in a lodge we had rented. It was a terrific weekend with a lot of food, fun, reminiscing and watching young cousins really get to know each other for the first time. My nephew brought his boat so that the adventurous could try their hand at tubing on the river that ran by the property. Since I had done this many times in the past, I decided that I would simply act as a spotter for my nephew and watch my kids and their kids enjoy the fun. (Actually, I was thinking that the rough water and the bouncing of the tube would probably have my body hurting for the next week. This, I contend, was a good evaluation of risk followed by good decision making.)

There was also a rope swing attached to a tree next to the water, allowing for a high flight followed by a dip in the rather cold river, and it drew everyone over to watch the young ones try their hand at it. There were actually two levels from which to begin the adventure over the water: one at the level of the river and one from a wall about 10 feet higher. All of the really young and the really old (i.e., my brother-in-law) tried the rope from the level of the water, and all were successful, including my older brother-in-law. I arrived at the rope swing shortly after he had made his plunge, only to have him and his supporting cast challenge me to take part. I told them that I would think about it, and this is where “context” really affected my decision to take a risk. The last time I had swung on a rope and dropped into water was probably 20 years ago. At that time I would swing out and complete a flip before I entered the water. No reason not to do the same thing now... right? And there was no way I could accomplish this feat in front of my wife, sister, children, grandchildren, nieces and nephews, not to mention my brother-in-law, by starting from the water’s edge. It would have to be from the 10-foot launching point. In my mind, at that moment, this all sounded completely reasonable, not to mention fun! As I took my position on the wall, I was thinking to myself that all I needed to do was perform like I did last time (20 years ago) and everything would be great. I was successful in getting out over the water before letting go (needless to say, I didn’t perform the flip I had imagined; it seems that upper-body strength at 65 is less than at 45). I’m not sure how it happened, but I ended up injuring the knuckle on one of my fingers, and I woke up the next morning with a stiff left shoulder. By the way, two weeks later I am feeling much better, as the swelling in my finger and the stiffness in my shoulder are almost gone.

As I reflect on the event, I am amazed at how the context (peer pressure, past success, cheering from my grandchildren, failure to assess my physical condition, etc.) led to a decision that was completely rational to me in the moment. I am pretty sure that the memory of the pain over the next several days will affect my decision making should such an opportunity arise again. Next time I will enter from the water’s edge!

Hardwired Inhibitions: Hidden Forces that Keep Us Silent in the Face of Disaster

Employees’ willingness and ability to stop unsafe operations is one of the most critical parts of any safety management system, and here’s why: Safety managers cannot be everywhere at once. They cannot write rules for every possible situation. They cannot engineer the environment to remove every possible risk, and when the big events occur, it is usually because of a complex and unexpected interaction of many different elements in the work environment. In many cases, employees working at the front line are not only the first line of defense; they are quite possibly the most important line of defense against these emergent hazards. Our 2010 study of safety interventions found that employees intervene in only about 39% of the unsafe operations that they recognize while at work. In other words, employees’ silence is a critical gap in safety management systems, and it is a gap that needs to be honestly explored and resolved.

An initial effort to resolve this problem - Stop Work Authority - has been beneficial, but it is insufficient. In fact, 97% of the people who participated in the 2010 study said that their company had given them the authority to stop unsafe operations, yet they still intervened in only about 39% of the unsafe operations they recognized. Stop Work Authority’s value is in assuring employees that they will not be formally punished for insubordination or slowing productivity. While fear of formal retaliation inhibits intervention, there are other, perhaps more significant forces that keep people silent.

Some might assume that the real issue is that employees lack sufficient motivation to speak up. This belief is unfortunately common among leadership and is captured in a familiar refrain: “We communicated that it is their responsibility to intervene in unsafe operations, but they still don’t do it. They just don’t take it seriously.” Contrary to this belief, we have spoken one-on-one with thousands of frontline employees, and nearly all of them, regardless of industry, culture, age or other demographic category, genuinely believe that they have a fundamental, moral responsibility to watch out for and help protect their coworkers. Employees’ silence is not simply a matter of poor motivation.

At the heart of this issue is the “context effect.” What employees think about, remember and care about at any given moment is heavily influenced by the specific context in which they find themselves. People literally see the world differently from one moment to the next as a result of the social, physical, mental and emotional factors that are most salient at the time. The key question becomes, “What factors in employees’ production contexts play the most significant role in inhibiting intervention?” While there are many, and they vary from one company to the next, I would like to introduce four common factors in employees’ production contexts:

THE UNIT BIAS

Can you think of a time when you were focused on something, realized that you should stop to deal with a different, more significant problem, but decided to stick with the original task anyway? That is the unit bias. It is a distortion in the way we view reality. In the moment, we perceive that completing the task at hand is more important than it really is, and so we end up putting off things that, outside of the moment, we would recognize as far more important. Now imagine that an employee is focused on a task and sees a coworker doing something unsafe. “I’ll get to it in a minute,” he thinks to himself.

BYSTANDER EFFECT

This is a well-documented phenomenon whereby we are much less likely to intervene or help others when we are in a group. In fact, the more people who are present, the less likely any one of us is to be the one who speaks up.

DEFERENCE TO AUTHORITY

When we are around people with more authority than us, we are much less likely to be the ones who take initiative to deal with a safety issue. We refrain from doing what we believe we should, because we subtly perceive such action to be the responsibility of the “leader.” It is a deeply-embedded and often non-conscious aversion to insubordination: When a non-routine decision needs to be made, it is to be made by the person with the highest position power.

PRODUCTION PRESSURE

When we are under pressure to produce something in a limited amount of time, it does more than make us feel rushed. It literally changes the way we perceive our own surroundings. Things that might otherwise be perceived as risks that need to be stopped are either not noticed at all or are perceived as insignificant compared to the importance of getting things done.

In addition to these four, there are other forces in employees’ production contexts that keep them from speaking up when they should. If we are going to get people to speak up more often, we need to move beyond “Stop Work Authority” and get over the assumption that motivating them will be enough. We need to help employees understand what is inhibiting them in the moment, and then give them the skills to overcome these inhibitors so that they can do what they already believe is right - speak up to keep people safe.

Safety Intervention: A Dynamic Solution to Complex Safety Problems

If your organization is like many that we see, you are spending ever-increasing time and energy developing SOPs, implementing regulations from an alphabet soup of government agencies, buying new PPE and equipment, and generally engineering your workplace to be as safe as possible. While this is both invaluable and necessary to succeed in today’s world, is it enough? The short answer is “no.” These are what we refer to as mechanical and procedural safeguards; they are absolutely necessary but also absolutely inadequate. You see, mechanical and procedural safeguards are static, slow to change, and of limited effectiveness, while our workplaces are incredibly complex, dynamic, and hard to predict. We simply can’t create enough barriers to cover every possible hazard in the world we live in. In short, you have to do it, but you shouldn’t think that your job stops there. To create safety in such a complex environment, we will have to find something else: something that permeates the organization, can react quickly, and is also creative. The good news is that you already have the required ingredient: people. If we can get our people to speak up effectively when they see unsafe acts, they can be the missing element that is everywhere in your organization, reacts instantly, and comes up with creative fixes. But can it be that easy? Again, the short answer is “no.”

In 2010 we completed a large-scale, cross-industry study of what happens when someone observes another person engaged in an unsafe action. We wanted to know how often people spoke up when they saw an unsafe act. If they didn’t speak up, why not? If they did speak up, how did the other person respond? Did they become angry or defensive, or did they show appreciation? Did the intervention produce immediate behavior change, long-term behavior change, or both? We asked these questions and many more. I don’t have the time and space to go into all of the findings of our research (see our EHS Today article); just know that people don’t speak up very often (39% of the time), and when they do speak up, they tend to do a poor job. If you evaluate our findings in light of the long history of research into cognitive biases (e.g., the fundamental attribution error, hindsight bias, etc.), which shows how humans tend to be hardwired to fail when the moment of intervention arises, it becomes clear where the 61% failure rate of speaking up comes from: it’s human nature.

We decided to test a theory: could we fight human nature simply by giving frontline workers a set of skills for intervening when they saw an unsafe action by one of their coworkers? We taught them how to talk to the person in a way that eliminated defensiveness, identified the actual reasons why the person did the task the unsafe way, and ultimately found a fix to make sure the behavior changed immediately and sustainably. We wanted to know whether simply learning these skills made people more likely to speak up and, if they did, whether a 90-second intervention would be dynamic and creative enough to produce immediate and sustainable behavior change. What we found in one particular company gave us our answer. Simply learning intervention skills made their workforce 30% more likely to speak up. Just knowing how to talk to people made them less likely to fall victim to the cognitive biases that I mentioned earlier. And when they did speak up, behavior changed at a far greater rate and the changes lasted much longer than they ever had previously, which helped produce a 57% reduction in Total Recordable Incident Rate (TRIR) and an 89% reduction in severity rate.

I would never tell a safety professional to stop working diligently on their mechanical and procedural barriers; they should be a significant component of the foundation on which safety programs are built. However, human intervention should be the component that holds the program together when things get crazy out in the real world. It can be as simple as helping your workers understand their propensity for not intervening and then giving them the ability and confidence to speak up when they do see something unsafe.

Human Error and Complexity: Why your “safety world view” matters

Have you ever thought about your ancestors, or looked at pictures of them, and realized, “I have that trait too!”? Just as your traits are in large part determined by random combinations of genes from your ancestry, the history behind your safety world view is probably largely the product of chance - for example, whether you studied Behavioral Psychology or Human Factors in college, which influential authors’ views you were exposed to, who your first supervisor was, or whether you worked in the petroleum, construction or aeronautical industry. Our “Safety World View” is built over time and dramatically impacts how we think about, analyze and strive to prevent accidents.

Linear View - Human Error

Let’s briefly look at two views - Linear and Systemic - not because they are the only possible ones, but because they have had and are currently having the greatest impact on the world of safety. The Linear View is integral to what is sometimes referred to as the “Person Approach,” exemplified by traditional Behavior Based Safety (BBS), which grew out of the work of B.F. Skinner and the application of his research to Applied Behavior Analysis and Behavior Modification. Whether we have thought about it or not, much of the industrial world operates on this “linear” theoretical framework. We attempt to understand events by identifying and addressing a single cause (antecedent) or distinct set of causes, which elicit unsafe actions (behaviors) that lead to an incident (consequences). This view affects both how we try to change unwanted behavior and how we go about investigating incidents. This behaviorally focused view naturally leads us to conclude in many cases that Human Error is, or can be, THE root cause of the incident. In fact, it is routinely touted that “research shows that human error is the cause of more than 90 percent of incidents.” We are also conditioned and “cognitively biased” to find this linear model appealing. I use the word “conditioned” because the linear model explains a lot of what happens in our daily lives, where situations are relatively clean and simple, so we naturally extend this way of thinking to more complex situations where it is perhaps less appropriate. Additionally, because we view accidents after the fact, the well-documented phenomenon of “hindsight bias” leads us to trace the cause linearly back to an individual, and since behavior is the core of our model, we have a strong tendency to stop there. The assumption is that human error (an unsafe act) is a conscious, “free will” decision and is therefore driven by psychological factors such as complacency, lack of motivation, carelessness or other negative attributes.
This leads to another well-documented phenomenon, the Fundamental Attribution Error, whereby we tend to attribute failure on the part of others to negative personal qualities such as inattention or lack of motivation, which in turn leads to the assignment of causation and blame. This assignment of blame may feel warranted and even satisfying, but it does not necessarily deal with the real “antecedents” that triggered the unsafe behavior in the first place. As Sidney Dekker stated, “If your explanation of an accident still relies on unmotivated people, you have more work to do.”

Systemic View - Complexity

In reality, most of us work in complex environments that involve multiple interacting factors and systems, and the linear view has a difficult time dealing with this complexity. James Reason (1997) convincingly argued for the complex nature of work environments with his “Swiss Cheese” model of accident causation. In his view, accidents are the result of active failures at the “sharp end” (where the work is actually done) and “latent conditions,” which include many organizational decisions at the “blunt end” (higher management) of the work process. Because no barrier is perfect, there are times when the active failures and latent conditions align, allowing an incident to occur. More recently, Hollnagel (2004) has argued that active failures are a normal part of complex workplaces because of the requirement for individuals to adapt their performance to the constantly changing environment and the pressure to balance production and safety. As a result, accidents “emerge” as this adaptation occurs (Hollnagel refers to this adaptive process as the “Efficiency-Thoroughness Trade-Off”). Dekker (2006) has recently added to this view the idea that this adaptation is normal and even “locally rational” to the individual committing the active failure, because he/she is responding to a context that may not be apparent to those observing performance in the moment or investigating a resulting incident. Focusing only on the active failure as the result of “human error” misses the real reasons that it occurs at all. Rather, understanding the complex context that is eliciting the decision to behave in an “unsafe” manner will provide more meaningful information. It is much easier to engineer the context than it is to engineer the person. While a person is involved in almost all incidents in some manner, human error is seldom the “sufficient” cause of the incident because of the complexity of the environment in which it occurs.
Attempting to explain and prevent incidents from a simple linear viewpoint will almost always leave out contributory (and often non-obvious) factors that drove the decision in the first place and thus led to the incident.

Why Does it Matter?

Thinking of human error as a normal and adaptive component of complex workplace environments leads to a different approach to preventing the incidents that can emerge out of those environments. It requires that we gain an understanding of the many and often surprising contextual factors that can lead to the active failure in the first place. If we are going to engineer safer workplaces, we must start with something that does not look like engineering at all - namely, candid, informed and skillful conversations with and among people throughout the organization. These conversations should focus on identifying the contextual factors that are driving the unsafe actions. Only with this information can we effectively eliminate what James Reason called “latent conditions,” which create the contexts that elicit unsafe actions. Additionally, this information should be used in the moment to eliminate active failures and should also be allowed to flow to decision makers at the “blunt end,” so that the system can be engineered to maximize safety. Your safety world view really does matter.

Safety Culture Shift: Three Basic Steps

In the world of safety, culture is a big deal. In one way or another, culture helps to shape nearly everything that happens within an organization - from shortcuts taken by shift workers to budget cuts made by managers. As important as it is, though, it seems equally confusing and intractable. Culture appears to emerge as an unexpected by-product of organizational minutiae: a brief comment made by a manager, misunderstood by direct reports, propagated during water cooler conversations, and compounded by otherwise unrelated management decisions to downsize, outsource, reassign, promote, terminate… Safety culture can either grow wild and unmanaged - unpredictably influencing employee performance and elevating risk - or it can be understood and deliberately shaped to ensure that employees uphold the organization’s safety values.

Pin it Down

The trick is to pin it down. A conveniently simple way of capturing the idea of culture is to say that it is the “taken-for-granted way of doing things around here,” but even this is not enough. If we can understand the mechanics that drive culture, we will be better positioned to shift it in support of safety. The good news is that, while it presents itself as extraordinarily complicated, culture is remarkably ordinary at its core. It is just the collective result of our brains doing what they always do.

Our Brains at Work

Recall the first time that you drove a car. While you might have found it exhilarating, it was also stressful and exhausting. Recall how unfamiliar everything felt and how fast everything seemed to move around you. Coming to a four-way stop for the first time, your mind was racing to figure out when and how hard to press the brake pedal, where the front of the car should stop relative to the stop sign, how long you should wait before accelerating, which cars at the intersection had the right-of-way, etc. While we might make mistakes in situations like this, we should not overlook just how amazing it is that our brains can take in such a vast amount of unfamiliar information and, almost instantly, come up with an appropriate course of action. We can give credit to the brain’s “executive system” for this.

Executive or Automatic?

But this is not all that our brains do. Because the executive system has its limitations - it can only handle a small number of challenges at a time, and appears to consume an inordinate amount of our body’s energy in doing so - we would be in bad shape if we had to go through the same elaborate and stressful mental process for the rest of our lives while driving. Fortunately, our brains also “automate” the efforts that work for us. Now, when you approach a four-way stop, your brain is free to continue thinking about what you need to pick up from the store before going home. When we come up with a way of doing something that works - even elaborate processes - our brains hand it over to an “automatic system.” This automatic system drives our future actions and decisions when we find ourselves in similar circumstances, without pestering the executive system to come up with an appropriate course of action.

Why it Matters

What does driving have to do with culture? Whatever context we find ourselves in - whether it is a four-way stop or a pre-job planning meeting - our brains take in the range of relevant information, come up with an effective course of action, try it out and, when it works, automate it as “the way to do things in this situation.”

For Example

Let’s imagine that a young employee leaves new-hire orientation with a clear understanding of the organization’s safety policies and operating procedures. At that moment, assuming that he wants to succeed within the organization, he believes that proactively contributing during a pre-job planning meeting will lead to recognition and professional success.

Unfortunately, at many companies, the actual ‘production’ context is quite different from the ‘new-hire orientation’ context. There are hurried supervisors, uninterested ‘old timers’, impending deadlines and too little time, and what seemed like the right course of action during orientation now looks like a sure-fire way to get ostracized and opposed. His brain’s “executive system” quickly determines that staying quiet and “pencil whipping” the pre-job planning form like everyone else is a better course of action; and in no time, our hapless new hire is doing so automatically - without thinking twice about whether it is the right thing to do.

Changing Culture

If culture is the collective result of brains figuring out how to thrive in a given context, then changing culture comes down to changing context - changing the “rules for success.” If you learned to drive in the United States but find yourself at an intersection in England, your automated way of driving will likely get you into an accident. When the context changes, the executive system has to wake up, find a new way to succeed given the details of the new context, and then automate that for the future.

How does this translate to changing a safety culture? It means that, to change safety culture, we need to change the context that employees work in so that working safely and prioritizing safety when making decisions leads to success.

Three Basic Steps:

Step 1

Identify the “taken-for-granted” behaviors that you want employees to adopt. Do you want employees to report all incidents and near-misses? Do you want managers to approve budget for safety-critical expenditures?

This exercise amounts to defining your safety culture. Avoid the common mistake of falling back on vague, safety-oriented value statements. If you aren’t specific here, you will not have a solid foundation for the next two steps.

Step 2

Analyze employees’ contexts to see what is currently inhibiting or competing against these targeted, taken-for-granted behaviors. Are shift workers criticized or blamed by their supervisors for near-misses? Are the managers who cut cost by cutting corners also the ones being promoted?

Be sure to look at the entire context. Often, factors like physical layout, reporting structure or incentive programs play a critical role in inhibiting these desired, taken-for-granted behaviors.

Step 3

Change the context so that, when employees exhibit the desired behaviors that you identified in Step 1, they are more likely to thrive within the organization.

“Thriving” means that employees receive recognition, satisfy the expectations of their superiors, avoid resistance and alienation, achieve their professional goals, and avoid conflicting demands for their time and energy, among other things.

Give It a Try

Shifting culture comes down to strategically changing the context that people find themselves in. Give it a try and you might find that it is easier than you expected. You might even consider trying it at home. Start at Step 1; pick one simple "taken-for-granted" behavior and see if you can get people to automate this behavior by changing their context. If you continue the experiment and create a stable working context that consistently encourages safe performance, working safely will eventually become "how people do things around here."

The Human Factor - Missing from Behavior Based Safety

Since the early 1970s, there has been an interest in the application of Applied Behavior Analysis (ABA) techniques to the improvement of safety performance in the workplace. The pioneering work of B.F. Skinner on Operant Conditioning in the 1940s, ’50s and ’60s led to a focus on changing unsafe behavior using observation and feedback techniques. Thousands of organizations have attempted to use various aspects of ABA to improve safety, with varying levels of success. This approach (referred to as Behavior Based Safety, or BBS) typically attempts to increase the chances that desired “safe” behavior will occur in the future by first identifying the desired behavior, then observing the performance of individuals in the workplace, and finally applying positive reinforcement (consequences) following the desired behavior. The idea is that as safe behavior is strengthened, unsafe behavior will disappear (“extinguish”).

The Linear View

Traditionally, incidents/accidents have been viewed as a series of cause and effect events that can be understood and ultimately prevented by interrupting the chain of events in some way. With this “Linear” view of accident causation, there is an attempt to identify the root cause of the incident, which is often determined to be some form of “Human Error” due to an unsafe action. The Linear view can be depicted as follows:

Event “A” (Antecedent) → Behavior “B” → Undesired Event → Consequence “C”

Driven by the views of Skinner and others, Behavioral Psychology and BBS have been concerned exclusively with what can be observed. The issue is that, while people do behave overtly, they also have “cognitive” capacity to observe their environment, think about it and make calculated decisions about how to behave in the first place. While Behavioral Psychologists acknowledge that this occurs, they argue that the “causes” of performance can be explained through an analysis of the Antecedents within the environment. However, since they also take a linear view, they tend to limit the “causal” antecedent to a single source known as the “root cause”.

Human Factors

The field of Human Factors Psychology has provided a body of research that has demonstrated that many, if not most, accidents evolve out of complex systems that are not necessarily linear. Some researchers call this a “Systemic” view of incidents. The argument is that incidents occur in complex environments, characterized as involving multiple interacting systems rather than just simple linear events. That is, multiple interacting events (Antecedents) combine to create the “right” context to elicit the behavior that follows.

In such complex environments, individuals are constantly evaluating multiple contextual factors to allow them to make decisions about how to act, rather than simply responding to single Antecedents that happen to be present. In this view, the decision to act in a specific (safe or unsafe) manner is directed by sources of information, some of which are only available to the individual and not obvious to on-lookers or investigators who attempt to determine causation following an incident.

Local Rationality

This is referred to as “Local Rationality” because the decision to act in a certain way makes perfect sense to the individual in the local context given the information that he has in the moment. The local rationality principle says that people do what makes sense given the situation, operational pressures and organizational norms in which they find themselves.

People don’t want to get hurt, so when they do something unsafe, it is usually because they are either not aware that what they are doing is unsafe, they don’t recognize the hazard, or they don’t fully realize the risk associated with what they are doing. In some cases they may be aware of the risk, but because of other contextual factors, they decide to act unsafely anyway. (Have you ever driven over the speed limit because you were late for an appointment?) The key here is developing an understanding of why the individual made or is making the decision to behave in a particular way.

A More Complete Understanding

We believe that the most fruitful way to understand this is to bring together the rich knowledge provided by behavioral research and human factors (including cognitive & social psychological) research to create a more complete understanding of what goes on when people make decisions to take risks and act in unsafe ways. We believe it is time to put the Human Factor into Behavior Based Safety.

Unsafe Behavior Is a Downstream Indicator

At first glance, the suggestion that behavior is a “downstream” indicator may seem ridiculous, because in the world of safety and accident prevention, behavior is almost universally viewed as an “upstream or leading” indicator. The more unsafe behaviors that are occurring, the more likely you are to have an undesired event and thus an increase in incident rate (downstream or lagging indicator). This view is the basis for most “behavior based safety” programs.

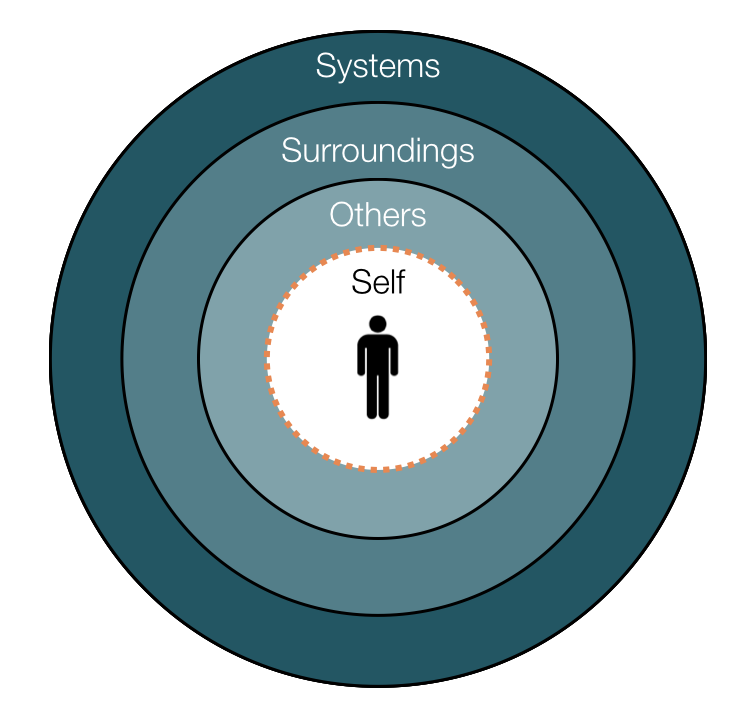

Over the past few years, however, there has been a great deal of research in the area of human factors which suggests that there are variables much further upstream than behavior that can help us decrease the chances of an incident. The human factors approach views an individual’s behavior as a component of a much more complex system which includes contextual factors such as social (supervisory and peer) climate, organizational climate (rules, values, incentives, etc.), environmental climate (weather, equipment, signage, etc.), and regulatory climate (OSHA, BOEMRE, etc.). Individuals work within these climates, evaluate possible actions based on their interpretation of these climates and then act based on that evaluation.

Research has shown that individuals, for the most part, make rational decisions based on the information that they have at their disposal in the moment. If an individual “understands” that her boss really rewards speed, then she is more likely to speed up even if she is not capable of working at that speed, thus increasing the likelihood of an incident. While speed of performance is a behavior, it is the result of the person’s knowledge of the demands of the climate and is therefore a downstream indicator. Evaluating and influencing the climate is thus more upstream and should be the focus of our intervention programs. When we can influence the decision-making process (upstream), we have a much better chance of creating safe/desired behavior (downstream).

The Safety Side Effect

Things Supervisors Do That, Coincidentally, Improve Safety

Common sense tells us that leaders play a special role in the performance of their employees, and there is substantial research to help us understand why this is the case. For example, Stanley Milgram’s famous studies of obedience in the 1960s demonstrated that, to their own dismay, people will administer what they think are painful electric shocks to strangers when asked to do so by an authority figure. This study and many others reveal that leaders are far more influential over the behavior of others than is commonly recognized.

In the workplace, good leadership usually translates to better productivity, efficiency and quality. Coincidentally, as research demonstrates, leaders whose teams are the most efficient and consistently productive also usually have the best safety records. These leaders do not necessarily “beat the safety drum” louder than others. They aren’t the ones with the most “Safety First” stickers on their hardhats or the tallest stack of “near miss” reports on their desks; rather, their style of leadership produces what we call the “Safety Side Effect.” The idea is this: Safe performance is a by-product of the way that good leaders facilitate and focus the efforts of their subordinate employees. But what, specifically, produces this effect?

Over a 30-year period, we have asked thousands of employees to describe the characteristics of their best boss - the boss who sustained the highest productivity, quality and morale. This “Best Boss” survey identified 20 consistently recurring characteristics, which we described in detail during our 2012 Newsletter series. On close inspection, one of these characteristics - “Holds Himself and Others Accountable for Results” - plays a significant role in bringing about the Safety Side Effect. Best bosses hold a different paradigm of accountability. Rather than viewing accountability as a synonym for “punishment,” these leaders view it as an honest and pragmatic effort to redirect and resolve failures. When performance failure occurs, the best boss...

- consistently steps up to the failure and deals with it immediately or as soon as possible after it occurs;

- honestly explores the many possible reasons WHY the failure occurred, without jumping to the simplistic conclusion that it was one person’s fault; and

- works with the employee to determine a resolution for the failure.

When a leader approaches performance failure in this way, it creates a substantially different working environment for subordinate employees - one in which employees:

- do not so quickly become defensive when others stop their unsafe behavior;

- focus more on resolving problems than protecting themselves from blame, and

- freely offer ideas for improving their own safety performance.

Can you work incident free without the use of punishment?

I was speaking recently to a group of mid-level safety professionals about redirecting unwanted behaviors and making change within individual and systemic safety systems. I had one participant who was particularly passionate about his views on changing the behaviors of workers. According to him, one cannot be expected to change behavior or work incident free without at least threatening the use of punitive actions. In his own words, “you cannot expect them to work safely if you can’t punish them for not working safely.” He was also quite vocal in his assertion that it is of little use to determine which contextual factors are driving an unsafe behavior. Again quoting him, “why do I need to know why they did it unsafely? If they can’t get it done, find somebody that can.”

I meet managers like this from time to time, and I’m immediately driven to wonder what it must be like to work for such a person. How could a person like this have risen in the ranks of his corporate structure? How could such an idiot... oh, wait. Am I not making the same mistake that I am now silently scolding him for? You see, when people do things that we see as evil, stupid, or just plain wrong, there are two incredibly common and powerful principles at play. The first principle is called the Fundamental Attribution Error (FAE) and, if allowed to take over one’s thought process, it will make a tyrant out of the most pleasant of us. The FAE says that when we see people do things that we believe to be undesirable, we attribute their actions to some personal flaw or to bad intentions. They are stupid, evil, heartless, or just plain incompetent. If we assume these traits to be the driving factor of an unsafe act and we have organizational power, we will likely move to punish this bad actor for their evil doings. After all, somebody so (insert evil adjective here) deserves to be punished. The truth is that most people are good and decent people who just want to do a good job.

Context Matters

This leads us to our second important principle, Local Rationality. Local Rationality says that when good and decent people do things that are unsafe or break policies or rules, they usually do it without any ill-intent. In fact, because of their own personal context, they do it because it makes sense to them to do it that way; hence the term “local rationality”. As a matter of fact, had you or I been in their situation, given the exact same context, chances are we would have done the same thing. It isn’t motive that normally needs to be changed, it’s context.

With this knowledge, let’s return to the two questions from our safety manager.

- “How can I be expected to change behavior or work incident free, without threatening to punish the wrong-doers?” and

- “Why do I need to know why they did it unsafely? If they can’t get it done, find somebody that can.”

Once we understand that, in general, people don’t knowingly and blatantly do unsafe things or break rules, but rather do them because of a possibly flawed work system (e.g., improper equipment, pressure from others, lack of training), we can calmly have a conversation to determine why they did what they did. In other words, we determine the context that drove the person to rush, cut corners, use improper tools, and so on. Once we know why they did it, we have a chance of creating lasting change by changing the contextual factors that led to the unsafe act.

Your key takeaways:

- When you see what you think is a pile of stupidity, be curious as to where it came from. Otherwise, you may find yourself stepping in it yourself.

- Maybe it wasn’t stupidity at all. Maybe it was just the by-product of the context in which they work. Find a fix together and you may both come out smelling like roses.

Consequences of Not Speaking Up

What we learned from a large-scale study of safety interventions (more than 3,000 employees) is that employees directly intervene in only about two of every five unsafe actions and conditions they observe in the workplace. The obvious concern is that a significant number of unsafe operations that could be stopped are not, which increases the likelihood of incidents and injuries. But this statistic is troubling for a less obvious reason as well: its cultural implication.

The influence of culture on safe and unsafe employee behavior is of such concern that regulatory bodies, like OSHA in the U.S. and the Health and Safety Executive (HSE) in the U.K., have strongly encouraged organizations to foster “positive safety cultures” as part of their overall safety management programs.

Employees are inclined to behave in ways they perceive to be congruent (consistent) with the social values and expectations, or “norms,” that constitute their organization’s culture. These behavioral norms are largely established through social interaction and communication, and in particular through the ways that managers and supervisors instruct employees, reward them, and allocate attention among them. When supervisors and opinion leaders infrequently or inconsistently address unsafe behavior, employees come to believe that formal safety standards are not highly valued and that they are not genuinely expected to follow them. In short, the low frequency of safety interventions in the workplace contributes to a culture in which employees are not positively influenced to work safely.

These two implications, (1) that a significant number of unsafe operations are not being stopped and (2) that safety culture is diminished, compound to create a problematic state of affairs. Employees are more likely to act unsafely in organizations with diminished safety cultures, yet their unsafe behavior is less likely to be stopped in those same organizations.

(Look for the full-length article in the May/June 2011 edition of EHS Today.)