Risk-Taking and Sense-Making

Risk tolerance is a real challenge for nearly all of us, whether we are managing a team in a high-risk environment or trying to get a teenager to refrain from using his cellphone while driving. It is also, unfortunately, a somewhat complicated matter. There are plenty of moving parts. Personalities, past experiences, fatigue and mood have all been shown to affect a person’s tolerance for risk. Apart from trying to change individuals’ “predispositions” toward risk-taking, there is a lot that we can do to help minimize risk tolerance in any given context. The key, as it turns out, is to focus our efforts on the context itself.

If you have followed our blog, you are by now familiar with the idea of “local rationality,” which goes something like this: Our actions and decisions are heavily influenced by the factors that are most obvious, pressing and significant (or, “salient”) in our immediate context. In other words, what we do makes sense to us in the moment. When was the last time you did something that, in retrospect, had you mumbling to yourself, “What was I thinking?” When you look back on a previous decision, it doesn’t always make sense because you are no longer under the influence of the context in which you originally made that decision.

What does local rationality have to do with risk tolerance? It’s simple. When someone makes a decision to do something that he knows is risky, it makes sense to him given the factors that are most salient in his immediate context.

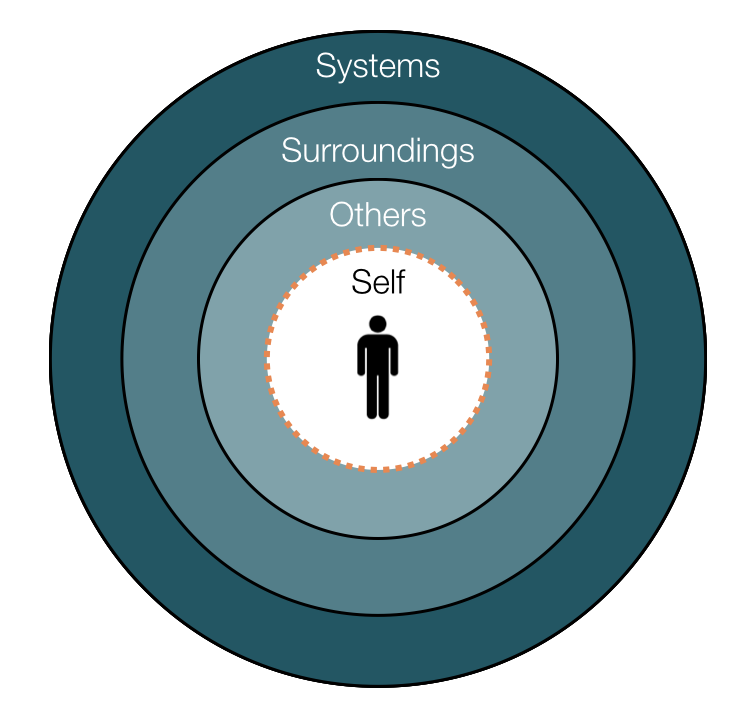

If we want to help others be less tolerant of risk, we should start by understanding which factors in a person’s context are likely to lead him to think that it makes sense to do risky things. There are many factors, ranging from the layout of the physical space to the structure of incentive systems. Some are obvious; others are not. Here are a couple of significant but often overlooked factors.

Being in a Position of Relative Power

If you have a chemistry set and a few willing test subjects, give this experiment a shot. Have two people sit in submissive positions (heads downcast, backs slouched) and one person stand over them in a power position (arms crossed, towering and glaring down at the others). After only 60 seconds in these positions, something surprising happens to the brain chemistry of the person in the power position: levels of testosterone (associated with risk tolerance) and cortisol (associated with risk aversion) change, and this person is now more inclined to do risky things. That’s right; when you are in a position of power relative to others in your context, you are more risk tolerant.

There is an important limiting factor here, though. If the person in power also feels a sense of responsibility for the wellbeing of others in that context, the brain chemistry changes and he or she becomes more risk averse. Parents are a great example. They are clearly in a power position relative to their children, but because parents are profoundly aware of their role in protecting their children, they are less likely to do risky things.

If you want to limit the effects of relative power-positioning on certain individuals’ risk tolerance (think supervisors, team leads, mentors and veteran employees), help them gain a clear sense of responsibility for the wellbeing of others around them.

Authority Pressure

On a remote job site in West Texas, a young laborer stepped over a pressurized hose on his way to get a tool from his truck. Moments later, the hose erupted and he narrowly avoided a life-changing catastrophe. This young employee was fully aware of the risk of stepping over a pressurized hose, and under normal circumstances, he would never have done something so risky; but in that moment it made sense because his supervisor had just instructed him with a tone of urgency to fetch the tool.

It is well documented that people will do wildly uncharacteristic things when instructed to do so by an authority figure. (See Stanley Milgram’s “Behavioral Study of Obedience.”) The troubling part is that people will do uncharacteristically dangerous things, risking life and limb, under the influence of minor and even unintentional pressure from an authority figure. Leaders need to be made aware of their influence and must unceasingly demonstrate that, for them, working safely trumps other commands.

A Parting Thought

There is certainly more to be said about minimizing risk tolerance, but a critical first step is to recognize that the contexts in which people find themselves, which are the very same contexts that managers, supervisors and parents have substantial control over, directly affect people’s risk tolerance.

So, with that “trouble” employee, relative, friend or child in mind, ask yourself: How might their context lead them to think that it makes sense to do risky things?